My Cloud Resume Challenge Journey

Hello, and welcome to my first blog post ever! I'm not used to writing online content, so please bear with me.

I am writing this post as the final step of the Cloud Resume Challenge, created by Forrest Brazeal.

You can read more about it here, but basically you have to build your resume as a website using a lot of cloud technologies.

In this post, I'll explain what my infrastructure looks like and highlight the steps that I'm proud of, or where I struggled the most.

You can see the source code for the challenge in my GitLab repo.

Why did I attempt the challenge?

I had been thinking about creating a dummy app with a complete CI/CD pipeline for a while, both for learning and portfolio purposes, but I never found the motivation or a project interesting enough to get started. Then I came across a Reddit post about the challenge, and I thought it was a great idea. Worst-case scenario: I get a brand-new resume. Best-case scenario: I learn new skills and can back them up with a good-looking project.

The challenge

Here is a quick recap of what the challenge is about, in case you didn't check the links above. It can be divided into 5 chunks:

- Pass a cloud certification.

- Build a website and host it on a cloud provider.

- Create an API to count visitors.

- Automate the deployments, tests, integration, etc.

- Write a blog post about it and share it with the world.

Cache Invalidation

Since the website is hosted behind a CDN, you have to invalidate the cache whenever you modify the site; otherwise, users may not see the latest version.

I'm happy with what I did regarding cache invalidation. It's not much, but it meets my needs.

I chose to use a Lambda function triggered by S3 events instead of invalidating the cache from the pipeline. This way:

- I don't have to grant CloudFront permissions to the pipeline role (the fewer, the better, right?).

- If the files are modified by another source, the cache still gets invalidated.
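
To illustrate the idea, such a function could look roughly like the Python sketch below. It is only a sketch under assumptions: the Python runtime, the `DISTRIBUTION_ID` environment variable, and the helper name `paths_from_event` are illustrative choices and are not taken from the repo. It reads the object keys from the S3 event and asks CloudFront to invalidate the matching paths.

```python
import os
import time
from urllib.parse import quote, unquote_plus


def paths_from_event(event):
    """Turn an S3 event notification into a sorted list of CloudFront paths."""
    paths = set()
    for record in event.get("Records", []):
        # S3 URL-encodes object keys in notifications (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        # CloudFront paths start with '/' and must be URL-encoded.
        paths.add("/" + quote(key))
    return sorted(paths)


def handler(event, context):
    """Lambda entry point: invalidate every object mentioned in the event."""
    paths = paths_from_event(event)
    if not paths:
        return {"invalidated": []}

    # Imported lazily so the helper above can be tested without boto3.
    import boto3

    cloudfront = boto3.client("cloudfront")
    cloudfront.create_invalidation(
        # Hypothetical variable name, set on the Lambda function.
        DistributionId=os.environ["DISTRIBUTION_ID"],
        InvalidationBatch={
            "Paths": {"Quantity": len(paths), "Items": paths},
            # Must be unique per invalidation request.
            "CallerReference": str(time.time_ns()),
        },
    )
    return {"invalidated": paths}
```

With this approach, only the Lambda role needs the `cloudfront:CreateInvalidation` permission, which matches the first point in the list above.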

Authentication Process

It took me a bit of time to figure out an authentication process that I was happy with. Of course, hardcoded secrets were not an option, but I didn't want to set up a vault for this either.

GitLab environment variables were a decent option, but I didn't like the idea of storing credentials for all the environments there, nor having them propagated to every pipeline.

Also, while I was preparing for the certification, a lot of the courses mentioned short-lived credentials and how we should use them as much as possible, so I wanted to give them a try.

I came up with a solution that looks a bit like a bastion: you connect to a central account and then assume roles in the other accounts where you need to work.

- The pipeline connects to AWS using OIDC.

- The admin connects using SSO.

- In both cases, Terraform then assumes the correct role based on the environment.

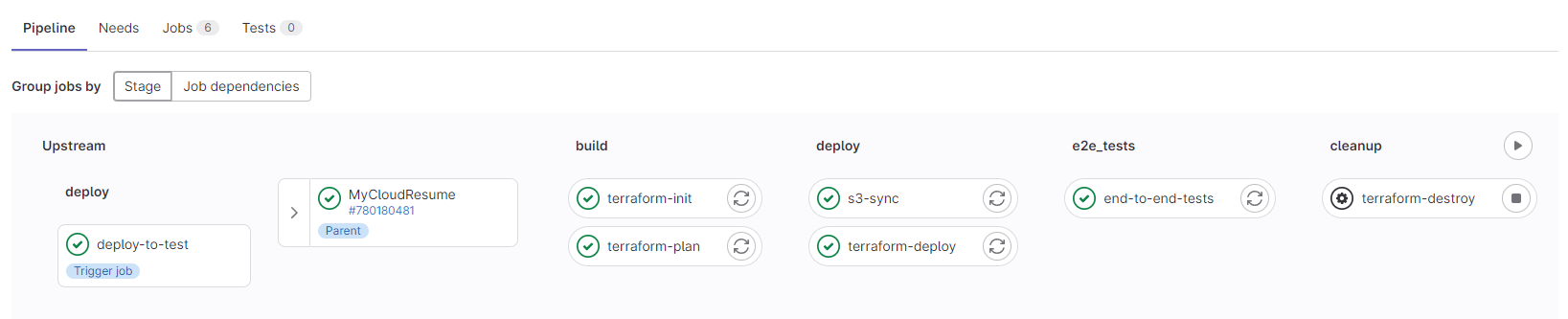
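
The "assume the correct role based on the environment" step can be pictured with a small Python sketch. In the project this is done by Terraform itself, so the code below is only an illustration of the mechanism, not the actual configuration. The account IDs, the role name `terraform-deployer`, and the function names are all made up for the example.

```python
# Hypothetical account IDs and role name; the real values are not in this post.
ACCOUNTS = {"dev": "111111111111", "prod": "222222222222"}
ROLE_NAME = "terraform-deployer"


def role_arn_for(environment):
    """Return the ARN of the role to assume for a given environment."""
    try:
        account_id = ACCOUNTS[environment]
    except KeyError:
        raise ValueError(f"unknown environment: {environment!r}") from None
    return f"arn:aws:iam::{account_id}:role/{ROLE_NAME}"


def assume_environment_role(environment, session_name="resume-deploy"):
    """Exchange the central-account identity for short-lived credentials."""
    # Imported lazily so role_arn_for can be used without boto3 installed.
    import boto3

    sts = boto3.client("sts")
    response = sts.assume_role(
        RoleArn=role_arn_for(environment),
        RoleSessionName=session_name,
        DurationSeconds=900,  # 15 minutes, the shortest duration STS allows
    )
    # Contains AccessKeyId, SecretAccessKey, SessionToken and Expiration.
    return response["Credentials"]
```

The credentials returned by STS expire on their own, so nothing long-lived has to be stored in the pipeline or on the admin's machine.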

CI/CD Pipeline

Regarding the pipeline, I wanted to use the same code for all the environments, and keep the ability to run the code from the command line if needed.

I also wanted the differences between environments to be managed in one place and not spread all over the repository.

Finally, I wanted to be able to deploy multiple independent instances of the infrastructure in the same account.

And of course, no credentials were to be hardcoded or stored as artifacts.

The solution I came up with is:

- The pipeline authenticates using OIDC and then assumes roles as needed.

- All parameters are passed as pipeline variables.

- The same CI files are included multiple times using GitLab's trigger feature.

- Terraform state files are stored in a private S3 bucket so they can be accessed outside of the pipeline if needed.

- A dynamic backend file is built for Terraform (because you can't use variables inside the backend config).

- Test environments are prefixed with the short commit SHA.

- CI deployments are managed through GitLab's dynamic environment feature.

- CI deployments are cleaned up automatically after a given amount of time, with no additional action.
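
As an example of the dynamic backend file mentioned in the list above, here is a minimal Python sketch that generates a partial backend configuration for the S3 backend. The bucket name, region, key layout, and function name are assumptions made for the illustration; the pipeline in the repo may build the file differently (for instance with a shell script).

```python
import os


def backend_config(bucket, region, environment, prefix=""):
    """Render the content of a partial S3 backend configuration file."""
    # Test environments get the short commit SHA as a prefix, so several
    # independent instances can live side by side in the same account.
    name = f"{prefix}-{environment}" if prefix else environment
    settings = {
        "bucket": bucket,
        "key": f"{name}/terraform.tfstate",  # hypothetical key layout
        "region": region,
    }
    return "".join(f'{key} = "{value}"\n' for key, value in settings.items())


if __name__ == "__main__":
    # CI_COMMIT_SHORT_SHA is a predefined GitLab CI variable; it is empty
    # when the script runs from a local command line.
    content = backend_config(
        bucket="my-terraform-states",  # hypothetical bucket name
        region="eu-west-1",  # hypothetical region
        environment="test",
        prefix=os.environ.get("CI_COMMIT_SHORT_SHA", ""),
    )
    with open("backend.hcl", "w", encoding="utf-8") as handle:
        handle.write(content)
```

The generated file can then be passed to Terraform with `terraform init -backend-config=backend.hcl`, which works the same way in the pipeline and from the command line.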

And now?

That's it! I've checked all the boxes of the Cloud Resume Challenge!

Although I still have a lot of ideas to improve the website, the pipeline or the overall security of the project, I'll try to stick to the "Done is better than perfect" concept for now.

Overall, this project was a great experience! I really enjoyed getting hands-on experience with AWS and Terraform, and I can't wait to use these new skills in my next mission.